The Data Life Cycle

The ISPE Good Practice Guide on Records and Data Integrity defines a data life cycle consisting of five phases: Creation; Processing; Review, Reporting, and Use; Retention and Retrieval; and Destruction.

A robust data integrity program protects data across this entire life cycle. Such a program includes a risk-based analysis of all data flows to identify, assess, mitigate, and communicate potential data integrity issues. Systems that support data integrity should be validated for their intended use, and the boundaries of each data flow should be identified. Throughout the life cycle, data must be protected from modification or deletion by unauthorized personnel, and changes to the data must be captured in audit trails or through appropriate procedures.

Data Creation. Data is created when it is captured from instrumentation or recorded manually. It must have the appropriate level of accuracy, completeness, content, and meaning, and must meet the ALCOA principles (attributable, legible, contemporaneous, original, and accurate). It should be stored in a predefined location and format. If the same information is generated in multiple places simultaneously (such as data in a PLC also being written to a data historian), the process owner must define where the primary data is retained.
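To make the creation requirements concrete, here is a minimal sketch of how a system might capture a single data point so that it is attributable, contemporaneous, and traceable to its original source and designated primary location. The class name `DataPoint`, its fields, and the tag names are hypothetical; legibility and accuracy are not something a record structure can enforce, and still depend on procedures and validated instruments.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class DataPoint:
    """Hypothetical record for one created data point."""

    value: float
    unit: str
    recorded_by: str       # Attributable: the person or instrument that recorded it
    source: str            # Original: where the value was first captured, e.g. "PLC-01"
    primary_location: str  # where the process owner has defined the primary copy lives
    # Contemporaneous: timestamped (in UTC) at the moment the record is created
    recorded_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def __post_init__(self):
        if not self.recorded_by:
            raise ValueError("a data point must be attributable to a person or instrument")
        if self.recorded_at.tzinfo is None:
            raise ValueError("timestamp must carry a time zone")


# A temperature reading that exists in both the PLC and the historian, with the
# historian designated (hypothetically) as the location of the primary data.
point = DataPoint(value=121.3, unit="degC", recorded_by="TT-101",
                  source="PLC-01", primary_location="historian")
```

Making the record immutable mirrors the requirement that data be protected from modification; any correction would be a new, separately attributable record rather than an edit.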

Data Processing. All electronic data is processed in some way, whether through analog-to-digital conversion, scaling, or zeroing; computers simply do not represent information the way humans do. Beyond that, algorithms may be applied to summarize, average, contextualize, or transform the data. For predicate rule electronic data, these systems must be validated for their intended use, but, truth be told, most of this processing happens in the background. Consider the calculation of F0 (pronounced "F-sub-zero"), a measure of the microbial lethality delivered by a sterilization cycle, expressed as the equivalent number of minutes at 121.1 °C. F0 is calculated from how far the lowest-reading temperature sensor in a set deviates from the 121.1 °C saturated-steam reference temperature, accumulated over the duration of the cycle. Surely such a complex algorithm must be validated. Even so, it may still be necessary to retain the input data in case of a deviation or investigation.
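A minimal sketch of the F0 calculation described above, assuming the conventional form F0 = Σ 10^((T - 121.1) / z) × Δt with z = 10 °C, evenly spaced samples, and simple rectangular summation. A validated system may integrate differently (for example, trapezoidally) or may only accumulate lethality above a minimum temperature; the function name `f0` and the input layout are illustrative. Because the result depends entirely on the raw sensor readings, retaining those readings is what allows F0 to be recomputed during an investigation.

```python
REFERENCE_TEMP_C = 121.1  # reference temperature for saturated-steam sterilization
Z_VALUE_C = 10.0          # conventional z-value used for F0


def f0(sensor_readings, interval_min):
    """Accumulate F0, in equivalent minutes at 121.1 degC.

    sensor_readings: one entry per sample time, each a sequence of temperatures
        (degC) from every sensor at that instant; the lowest reading is used.
    interval_min: time between samples, in minutes.
    """
    total = 0.0
    for sample in sensor_readings:
        coldest = min(sample)  # the cycle is only as lethal as its coldest point
        total += 10 ** ((coldest - REFERENCE_TEMP_C) / Z_VALUE_C) * interval_min
    return total


# Hypothetical 15-minute hold sampled once per minute by three sensors.
cycle = [[121.5, 121.1, 121.8]] * 15
print(round(f0(cycle, 1.0), 2))
```

A sample whose coldest sensor sits exactly at 121.1 °C contributes one full minute of F0; a sample 10 °C colder contributes one tenth of that.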

Another example of data processing involves the metadata and associated parameters used in calculations. In this case, a large set of data collected over a period of time is totaled. If the total is 100 or above, the process passes; if it is below 100, it fails. The report does not show the total, only a pass or fail indication. When the report was run at the time of processing, the result was “pass,” but when it was regenerated from the same data two years later, the result was “fail.” What caused the change?

The cause of the change was the threshold parameters associated with the instrument. During those two years, the original instrument was replaced with a new, more accurate one, and the threshold parameters in the data historian were adjusted to match. When the old data was recalculated using the new threshold parameters, the outcome shifted just enough to fall below the pass limit. The parameters in effect when data is processed are themselves metadata that must be retained along with the data.
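The scenario above can be reproduced in a few lines. This sketch assumes, purely for illustration, that the threshold parameter is a low cutoff below which readings are excluded from the total; the parameter values, dates, readings, and names (`THRESHOLD_HISTORY`, `threshold_in_effect`, `evaluate`) are all hypothetical. Keeping parameters as dated, versioned metadata lets a regenerated report use the values that applied when the data was collected, and returning the total alongside the verdict makes a near-miss visible.

```python
from datetime import date

PASS_LIMIT = 100.0

# Hypothetical parameter history: (effective date, threshold). Readings below
# the threshold are treated as noise and excluded from the total.
THRESHOLD_HISTORY = [
    (date(2020, 1, 1), 0.5),  # original instrument
    (date(2022, 1, 1), 1.0),  # replacement, more accurate instrument
]


def threshold_in_effect(on):
    """Return the threshold that applied on the given date."""
    current = None
    for effective, threshold in sorted(THRESHOLD_HISTORY):
        if effective <= on:
            current = threshold
    if current is None:
        raise ValueError(f"no threshold in effect on {on}")
    return current


def evaluate(readings, threshold):
    """Total the readings at or above the threshold and compare to the limit."""
    total = sum(r for r in readings if r >= threshold)
    return total, ("pass" if total >= PASS_LIMIT else "fail")


readings = [9.7] * 10 + [0.8] * 5  # hypothetical data collected on 2020-06-01
collected_on = date(2020, 6, 1)

# Parameters in effect at collection time reproduce the original result;
# applying today's parameters to the same data changes it.
original = evaluate(readings, threshold_in_effect(collected_on))
naive = evaluate(readings, threshold_in_effect(date(2022, 6, 1)))
```

Here `original` reports pass with a total of about 101, while `naive` reports fail with a total of about 97, from identical readings.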

Data Review, Reporting, and Use. Data review should determine whether predefined criteria have been met and should be based on an understanding of the process and its impact on product quality. Manual reviews should be governed by procedures, while electronic reviews should be validated. One form of electronic review popular in electronic batch records (EBRs) is “review by exception.” Consider the mountains of data generated during a batch process, covering the readiness, allocation, sampling, testing, QC release, and staging of all materials, as well as the equipment, processes, environments, and other elements that make up a batch. Computers evaluate each set of data against known criteria, so that the final batch record contains only the data points specifically required by predicate rules, along with “exceptions”: the cases where the data did not meet the criteria. Exceptions may or may not affect product quality. For example: “from 12:01:00 to 12:05:00 compaction room humidity was 71 %RH, outside the 30 to 70 %RH acceptable range.” Ideally, rules are refined over time, using product and process understanding, so that the automated systems make better decisions about what is and is not an exception.
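A minimal review-by-exception sketch for one monitored parameter: consecutive out-of-range readings are grouped into a single exception and rendered as a batch-record line like the humidity example above. The function names, the choice to report the reading furthest outside the range, and the sample data are assumptions; a real EBR system would evaluate many parameters against validated, product-specific rules.

```python
from datetime import datetime


def find_exceptions(readings, low, high):
    """Group consecutive out-of-range readings into exception runs.

    readings: (timestamp, value) pairs sorted by time.
    Returns a list of runs; each run is a list of (timestamp, value) pairs.
    """
    exceptions, run = [], []
    for ts, value in readings:
        if low <= value <= high:
            if run:  # an in-range reading closes the current run
                exceptions.append(run)
                run = []
        else:
            run.append((ts, value))
    if run:  # data ended while still out of range
        exceptions.append(run)
    return exceptions


def describe(run, parameter, unit, low, high):
    """Render one exception run as a line for the batch record."""
    start, end = run[0][0], run[-1][0]
    # Report the reading furthest outside the acceptable range.
    worst = max((v for _, v in run), key=lambda v: max(low - v, v - high))
    return (f"from {start:%H:%M:%S} to {end:%H:%M:%S} {parameter} was "
            f"{worst:g} {unit}, outside the {low:g} to {high:g} {unit} acceptable range")


# Hypothetical one-minute humidity readings from 12:00 to 12:06.
humidity = [(datetime(2024, 1, 1, 12, m), v)
            for m, v in enumerate([65, 71, 71, 71, 71, 71, 68])]
for run in find_exceptions(humidity, 30, 70):
    print(describe(run, "compaction room humidity", "%RH", 30, 70))
```

With the sample data, this prints the single line `from 12:01:00 to 12:05:00 compaction room humidity was 71 %RH, outside the 30 to 70 %RH acceptable range`; the in-range readings at 12:00 and 12:06 never reach the batch record.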

However, even the most comprehensive systems require periodic manual review. Manual reviews must include the relevant audit trails, associated metadata, and original data or true copies (copies that preserve content and meaning). For dynamic data, such as that produced in high-performance liquid chromatography (HPLC) testing, the data must be examined in context, using the tools, methods, and controls with which it was created.

Data Retention and Retrieval. Data must be readily available throughout the defined retention period and retrievable in a human-readable format. For dynamic data, this means the applications and interpretation tools must also be maintained so that the data and corresponding results can be reproduced.

Paper records may be retained electronically, provided the copy is verified as a true copy, meaning that its content and meaning are preserved. If changes are made to the associated hardware or software, the data owner should verify that the data can still be accurately retrieved.
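One way to support the verification described above, when data moves to new storage or a system is upgraded, is a checksum manifest: record a SHA-256 digest of every file before the change and compare afterwards. This is a sketch using only Python's standard library, and the function names are hypothetical. A matching digest shows the bytes are unchanged, which is what a migration needs; it cannot show that a scan of a paper record preserves content and meaning, nor that new software still interprets the files correctly. Those still require a documented comparison and a retrieval test.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large records need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root):
    """Map each file's path (relative to root) to its SHA-256 digest."""
    root = Path(root)
    return {str(p.relative_to(root)): sha256_of(p)
            for p in sorted(root.rglob("*")) if p.is_file()}


def verify_manifest(root, manifest):
    """Return (missing, changed): files absent now, and files whose bytes differ."""
    current = build_manifest(root)
    missing = sorted(set(manifest) - set(current))
    changed = sorted(name for name in manifest
                     if name in current and current[name] != manifest[name])
    return missing, changed
```

The manifest itself becomes a record: it should be stored separately from the data it describes so that it cannot be silently regenerated after the fact.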

Data retention and retrieval procedures should consider:

- Disaster recovery – Plans should cover not only the data but also applications, operating environments, and even specific hardware that may affect the readability of the data.

- Durability of media – Magnetic tapes and drives degrade over time and are susceptible to environmental conditions. CDs and DVDs break down in ultraviolet light and can become unreadable in as little as seven years. Solid-state storage can fail as well.

- Environmental controls – What would happen in the event of a server room fire? Is media stored where it can be damaged by heat, water, radiation, and the like?

- Duplicate data – Storing data redundantly across a RAID array is a good risk-mitigation strategy. Maintaining the same primary data separately on two different systems is a bad idea. Consider the company that kept one set of product specifications in its ERP system and another, similar set in its MES. Which was the specification of record? And when the two sets were compared, where had all the differences come from?

- Encryption – Use encryption to enhance security, but ensure that controls are in place to recover encrypted data properly and completely.
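For the duplicate-data example above, a reconciliation between the two systems can at least surface the differences. This sketch assumes each system's specifications can be exported as a mapping keyed by (material, attribute); the function name, material code, attribute names, and values are invented. It reports what differs, not which system is right, which is why the lasting fix is to designate a single system of record.

```python
def reconcile(erp, mes):
    """Compare two specification sets keyed by (material, attribute).

    Returns (only_in_erp, only_in_mes, mismatched), where mismatched holds
    (key, erp_value, mes_value) for keys present in both with different values.
    """
    only_erp = sorted(erp.keys() - mes.keys())
    only_mes = sorted(mes.keys() - erp.keys())
    mismatched = sorted((k, erp[k], mes[k]) for k in erp.keys() & mes.keys()
                        if erp[k] != mes[k])
    return only_erp, only_mes, mismatched


# Hypothetical exports from the two systems.
erp = {("API-100", "assay_min_pct"): 98.0, ("API-100", "moisture_max_pct"): 0.5}
mes = {("API-100", "assay_min_pct"): 98.5, ("API-100", "particle_size_um"): 50}

only_erp, only_mes, mismatched = reconcile(erp, mes)
```

With these exports, each system holds one attribute the other lacks, and the shared assay limit disagrees (98.0 versus 98.5).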

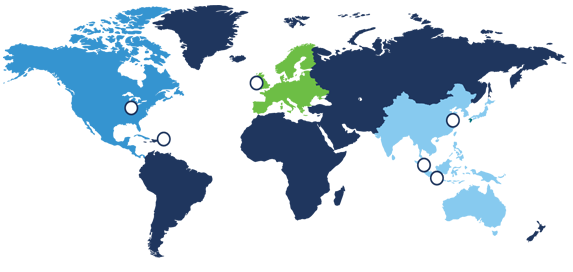

Data Destruction. After the required retention period, original data can be destroyed in accordance with approved procedures. Note, however, that retention requirements may differ by jurisdiction, and different countries have differing laws on litigation holds. Before destroying regulated records, verify that they are not on hold to support litigation.
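The pre-destruction checks above can be expressed as a simple gate: a record is eligible only if it is not under a litigation hold and the longest retention period among the jurisdictions that apply to it has elapsed. Everything here is hypothetical, including the function names, the record schema, the jurisdiction labels, and the retention periods, which are placeholders rather than actual regulatory requirements.

```python
from datetime import date


def add_years(d, years):
    """Add whole years, mapping Feb 29 to Feb 28 in non-leap target years."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        return d.replace(year=d.year + years, day=28)


def eligible_for_destruction(record, retention_years, legal_holds, today):
    """Return (eligible, reason) for one record.

    record: dict with 'id', 'created' (a date), and 'jurisdictions' keys.
    retention_years: jurisdiction label -> retention period in whole years.
    legal_holds: set of record ids currently under litigation hold.
    """
    if record["id"] in legal_holds:
        return False, "record is under litigation hold"
    # The longest applicable retention period governs.
    years = max(retention_years[j] for j in record["jurisdictions"])
    keep_until = add_years(record["created"], years)
    if today < keep_until:
        return False, f"retention period runs until {keep_until.isoformat()}"
    return True, "retention period elapsed and no hold in place"


# Placeholder values for illustration only.
retention = {"A": 5, "B": 10}
record = {"id": "BR-0042", "created": date(2012, 3, 1), "jurisdictions": ["A", "B"]}
```

Checking the hold first means a record under litigation hold is never released, however long ago its retention period ended.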

Before destroying any data, verify that it is no longer needed and that any copy intended to replace it is complete and usable. Consider the company that scanned all its validation documents so that it could discard the paper and keep only the electronic scans. Two years later, it was astonished to discover that a single scan file had been copied and renamed for every document, so the original validation evidence was lost. Or consider the company that reused old backup tapes each month for its daily server backups. When a failure occurred, it was shocked to find that the daily backups had never run, because the “system” detected that a backup had already been written to the tape.
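A content-hash check run before the paper was discarded would have caught the scanning failure above, because every renamed copy of the same scan has an identical SHA-256 digest. This is a minimal sketch using Python's standard library, and the function name is hypothetical; it flags byte-identical files but cannot confirm that a scan is legible or complete.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def find_duplicate_content(root):
    """Group files under root whose bytes are identical, regardless of name."""
    root = Path(root)
    groups = defaultdict(list)
    for path in sorted(root.rglob("*")):
        if path.is_file():
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            groups[digest].append(str(path.relative_to(root)))
    # Only digests shared by more than one file indicate a problem.
    return [names for names in groups.values() if len(names) > 1]
```

In a set of scans that should all be distinct documents, any group returned here is a reason to stop before the originals are destroyed.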

Your data is important. Do you still have questions about what to do with it? Connect with John Hannon, our Business Area Lead for Automation and IT.